Presentation

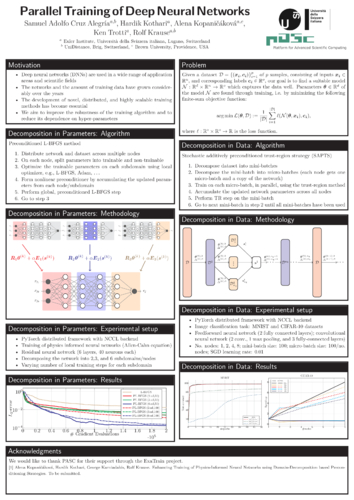

P47 - Parallel Training of Deep Neural Networks

Presenter

Description

Deep neural networks (DNNs) are used in a wide range of application areas and scientific fields. The accuracy and expressivity of DNNs are tightly coupled to the number of network parameters and to the amount of data used for training. As a consequence, both the networks and the training datasets have grown considerably over the last few years. Since this trend is expected to continue, developing novel distributed and highly scalable training methods has become an essential task. In this work, we propose two distributed training strategies that leverage nonlinear domain-decomposition methods, which are well established in numerical mathematics. The proposed training methods decompose the parameter space and the data space, respectively. We present the necessary algorithmic ingredients for both training strategies. Their convergence properties and scaling behavior are demonstrated on several benchmark problems. Moreover, we compare both proposed approaches with the widely used stochastic gradient optimizer and show a significant reduction in the number of iterations and in execution time. Finally, we demonstrate the scalability of our PyTorch-based training framework, which uses CUDA and NCCL in the backend.
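To give a rough idea of what decomposing the parameter space can look like, the following is a minimal sketch and not the method presented on the poster. It assumes a toy linear least-squares model, a hand-picked split of the parameters into two blocks, and a simple damped additive combination of the local corrections; the function names (`local_correction`, `train_decomposed`) and all hyperparameters are hypothetical. Plain Python is used so that the sketch runs without PyTorch, CUDA, or NCCL.

```python
import random


def loss(w, X, y):
    """Loss 0.5 * mean((X w - y)^2) of a linear model."""
    total = 0.0
    for row, target in zip(X, y):
        pred = sum(wi * xi for wi, xi in zip(w, row))
        total += 0.5 * (pred - target) ** 2
    return total / len(X)


def gradient(w, X, y):
    """Gradient of the loss with respect to every parameter."""
    grad = [0.0] * len(w)
    for row, target in zip(X, y):
        residual = sum(wi * xi for wi, xi in zip(w, row)) - target
        for j, xj in enumerate(row):
            grad[j] += residual * xj / len(X)
    return grad


def local_correction(w, block, X, y, lr, local_steps):
    """Take a few gradient steps on one parameter block, others frozen."""
    local_w = list(w)
    for _ in range(local_steps):
        grad = gradient(local_w, X, y)
        for j in block:
            local_w[j] -= lr * grad[j]
    # The correction is nonzero only on the entries of this block.
    return [lw - gw for lw, gw in zip(local_w, w)]


def train_decomposed(X, y, blocks, outer_steps=200, lr=0.4, local_steps=5):
    """Damped additive combination of independent block corrections."""
    w = [0.0] * len(X[0])
    history = [loss(w, X, y)]
    damping = 1.0 / len(blocks)  # assumption: plain averaging of corrections
    for _ in range(outer_steps):
        # Every correction depends only on the current w, so in a
        # distributed setting each block could be handled by its own worker.
        corrections = [
            local_correction(w, block, X, y, lr, local_steps) for block in blocks
        ]
        for correction in corrections:
            w = [wi + damping * ci for wi, ci in zip(w, correction)]
        history.append(loss(w, X, y))
    return w, history


# Synthetic, noise-free data for illustration only.
rng = random.Random(0)
true_w = [1.0, -2.0, 0.5, 3.0]
X = [[rng.uniform(-1.0, 1.0) for _ in true_w] for _ in range(32)]
y = [sum(wi * xi for wi, xi in zip(true_w, row)) for row in X]

blocks = [[0, 1], [2, 3]]  # hypothetical split of the four parameters
w, history = train_decomposed(X, y, blocks)
```

In this sketch the local corrections are computed independently and only combined in a synchronization step, which is the property that makes such schemes candidates for parallel execution. A data-space decomposition would instead give each worker a subset of the rows of `X` and `y`; it is not shown here.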

Time

Tuesday, June 27, 19:30 - 21:30 CEST

Location

Hall

Session

Poster Session and Reception

Event Type

Poster